We built a microservices architecture for e-commerce integration using ASP.NET Core to provide the foundation for a system that efficiently transforms data between supplier systems and Shopify. Here's how we did it.

Ever tried syncing a dozen systems - all with different data formats and integration technologies - and felt like you're herding cats? Yes, us too. That's why we built MeldEagle - a SaaS platform that takes the chaos out of e-commerce data integration. We're talking about ingesting data from manufacturers and wholesalers in whatever format they throw at us, transforming it, and syncing it seamlessly with Shopify. And we did it all with a microservices architecture using ASP.NET Core hosted in Azure.

I'm Kat Korson, Director at Red Eagle Tech, and I'm here to spill the beans on how we made it happen. From wrangling massive product catalogues to dodging Shopify's API rate limits, we've got stories - and solutions - that might just save you a few headaches. So, grab a brew, and let's dive into the nuts and bolts of building a system that's as robust as it is user-friendly.

It all started with my team and me speaking to Shopify store owners who were struggling to integrate data from multiple vendors, often in time-consuming, manual ways. Our solution automatically ingests data in whatever format vendors offer, transforms and enriches it, and then synchronises everything with Shopify. Users get one easy-to-use web interface to view and manage everything.

In this article, I share how we built the microservices architecture that made this possible, and the lessons we learnt along the way. If you are new to microservices and want to understand the fundamentals first, start with our complete guide to e-commerce microservices architecture.

Why we used ASP.NET Core 8 as the foundation

Choosing the right tech stack for MeldEagle was crucial to its success. First and foremost, we believe the best development tools are the ones your team already knows well. Our engineers have extensive experience with C# and the .NET ecosystem, which meant we could hit the ground running and focus on solving the complex integration challenges instead of wrestling with unfamiliar technology. This is pretty important when you're handling product catalogues with thousands of items and updating inventory in real time.

But it's not just about sticking with what's comfy; ASP.NET Core has the chops to back it up. The tooling is spot on for modern DevOps practices. We set up CI/CD pipelines with GitHub Actions, so every release is built, tested, and deployed automatically. Add in infrastructure-as-code using Bicep, and our environments are sorted, with consistent, reliable deployments from development right through to production. It's the kind of setup that lets us sleep soundly at night.

Cost-effectiveness played a role in our decision as well. ASP.NET Core can be hosted on Linux infrastructure within Microsoft Azure, which offers a more economical option compared to traditional Windows hosting. This cross-platform capability means we can pass these savings on to our clients while maintaining the performance and reliability they expect.

We also decided to play it smart by sticking to Long Term Support (LTS) versions, like ASP.NET Core 8.0. LTS releases receive security patches and fixes for three years, so we stay secure and supported without the upheaval of upgrading as often as we would with Standard Term Support (STS) releases. It's a bit like having your cake and eating it - staying current without the chaos.

Breaking it down

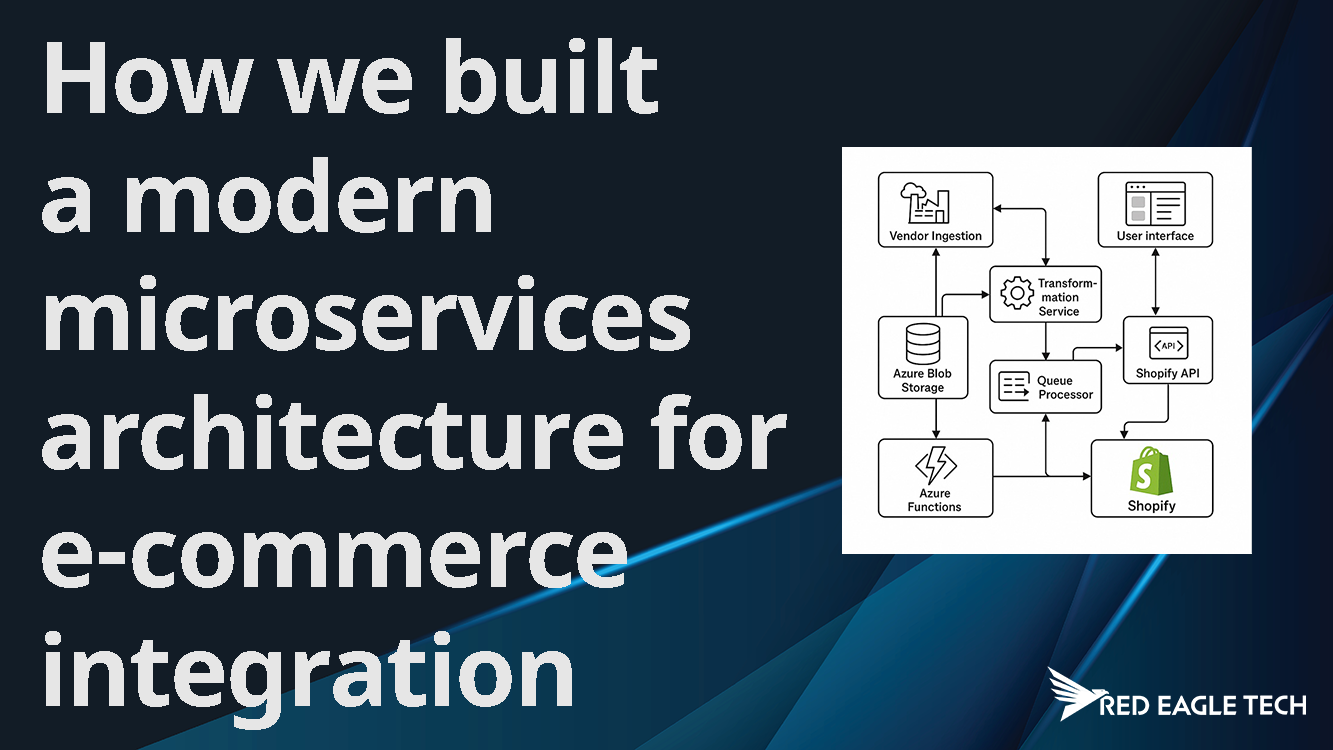

Instead of building one large monolithic application, we split our system into focused microservices:

- A web application that handles the user interface and configuration, and orchestrates services when our users' actions require it.

- Supplier data adapters - dedicated microservices for each supplier data format - yes, naturally, they're all different and have their unique quirks!

- Shopify integration services - separate services for reading from and writing to each Shopify data type.

We did this because it meant that we could scale each component independently based on its specific load, our engineers could develop and deploy services without affecting the whole system, and when supplier formats changed (which of course they did) we only needed to update the relevant adapter.

We also implemented these microservices as a mix of Azure Function apps and Web apps, depending on the functionality required. Function apps let us use an event-driven architecture and pay only for actual usage, which suits e-commerce integration well because unpredictable spikes in data processing activity are common.

Data transformation patterns

Mapping data between the different systems was probably one of the most difficult parts of this integration. To tackle it, we relied on a few key design patterns:

ETL pattern with staging tables

We used a classic Extract-Transform-Load pattern, but with a key difference: staging tables acted as checkpoints between phases.

In the Extract phase, we pull data from supplier systems into staging tables. For instance, our Oxford Products adapter extracts product information and stores it temporarily in a structured format.

During the Transform phase, we run multiple processing steps:

- Enrichment processes add brand-specific configurations and standardise data formats

- Category mapping translates supplier categories to Shopify's taxonomy structure, using artificial intelligence (AI) to remove most of the manual grunt work

- Variant generation creates appropriate product variants based on options like size and colour
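Variant generation boils down to producing one variant per combination of option values. The sketch below shows that idea in Python for brevity (MeldEagle itself runs on C#). It assumes a supplier's options arrive as a mapping from option name to a list of values; the function name and the SKU scheme are hypothetical.

```python
from itertools import product


def generate_variants(base_sku: str, options: dict[str, list[str]]) -> list[dict]:
    """Create one variant for every combination of option values (e.g. size x colour)."""
    names = list(options)  # option names in supplier order, e.g. ["Size", "Colour"]
    variants = []
    for combo in product(*(options[name] for name in names)):
        variants.append({
            # Hypothetical SKU scheme: base SKU followed by each option value
            "sku": "-".join([base_sku, *combo]),
            "options": dict(zip(names, combo)),
        })
    return variants
```

For example, `generate_variants("TSHIRT", {"Size": ["S", "M"], "Colour": ["Red", "Blue"]})` returns four variants, starting with SKU `TSHIRT-S-Red`, and a product with no options still gets a single default variant.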

Finally, in the Load phase, the processed data is published to Shopify using their API, with careful handling of rate limits and error conditions.

This staged approach meant that processing could resume from the last checkpoint if a step failed, that transformation progress could be tracked in real time, and that errors could be handled in detail at each stage.
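To illustrate how staging checkpoints make a failed run resumable, here is a minimal Python sketch (not MeldEagle's C# code). It assumes each transform step takes the previous stage's rows and returns new rows, and it models the staging tables as an in-memory dictionary keyed by stage name; the class and stage names are hypothetical.

```python
from typing import Callable

# A transform step takes the previous stage's rows and returns new rows
Step = Callable[[list[dict]], list[dict]]


class StagedPipeline:
    """ETL pipeline that checkpoints each stage's output in a staging table."""

    def __init__(self, steps: list[tuple[str, Step]]) -> None:
        self.steps = steps
        # In-memory stand-in for staging tables: stage name -> rows
        self.staging: dict[str, list[dict]] = {}

    def run(self, extracted: list[dict]) -> list[dict]:
        # Extract checkpoint: keep the first extract so a rerun resumes the same data
        rows = self.staging.setdefault("extract", extracted)
        for name, step in self.steps:
            if name in self.staging:
                # Checkpoint exists: this stage already succeeded, so skip it
                rows = self.staging[name]
                continue
            rows = step(rows)  # if this raises, earlier checkpoints are kept
            self.staging[name] = rows
        return rows  # ready for the Load phase
```

If, say, a category-mapping step raises, calling `run` again skips the stages that already have checkpoints and picks up from the failed one. Because each stage's output is stored, progress can also be reported by counting completed stages.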

Repository pattern for data access

Rather than accessing the database directly, we abstracted data operations through repositories. For example, our product repository provides methods to retrieve products for a specific brand, store products in staging tables, and get staged products ready for processing.

This approach gave us the flexibility to change our data access strategy without affecting business logic and made unit testing much easier.
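A repository boils down to an interface that business logic depends on, plus one or more implementations behind it. Here is a minimal Python sketch of the three operations described above, with a `Protocol` standing in for a C# interface and an in-memory implementation of the kind you might use in unit tests. All class and method names here are hypothetical.

```python
from dataclasses import dataclass, replace
from typing import Protocol


@dataclass(frozen=True)
class Product:
    sku: str
    brand: str
    title: str
    staged: bool = False


class ProductRepository(Protocol):
    """Business logic depends on this interface, never on the database directly."""

    def get_by_brand(self, brand: str) -> list[Product]: ...
    def stage(self, products: list[Product]) -> None: ...
    def get_staged(self) -> list[Product]: ...


class InMemoryProductRepository:
    """Test double; a production implementation would query the real database."""

    def __init__(self) -> None:
        self._products: dict[str, Product] = {}

    def get_by_brand(self, brand: str) -> list[Product]:
        return [p for p in self._products.values() if p.brand == brand]

    def stage(self, products: list[Product]) -> None:
        for p in products:
            self._products[p.sku] = replace(p, staged=True)

    def get_staged(self) -> list[Product]:
        return [p for p in self._products.values() if p.staged]


def count_staged_for_brand(repo: ProductRepository, brand: str) -> int:
    # Business logic written against the interface, so it is trivial to unit test
    return sum(1 for p in repo.get_staged() if p.brand == brand)
```

Swapping the data access strategy then means writing a new class with the same three methods, and `count_staged_for_brand` does not change.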

Challenges we faced and how we solved them

It's always worth highlighting the key challenges in a journey like this, because from challenge comes learning. And there are always plenty of challenges in coding. Here are a few we faced and how we solved them:

1. Handling large product catalogues

Some of our clients have many thousands of products, each with multiple images, variants and metafield objects. Some configuration changes that users make can also require us to update every product in the entire range. This is normal for most large multichannel or e-commerce retailers, but it would have caused problems with an old-fashioned batch processing approach that tries to process everything in one go.

We solved this by implementing a queue-based system that processes updates in real time, grouping changes into small batches to keep processing fast. Instead of trying to process the entire catalogue at once, we break it into manageable chunks of 100 products per batch, and each batch moves through the pipeline independently. From a user's point of view, this all happens like magic in the background, and they can see the live status as products are processed and loaded into Shopify.
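A minimal Python sketch of the batching idea follows (MeldEagle itself runs on C#). An in-memory `deque` stands in for a real message queue, and the function names, message shape and `process` callback are hypothetical. Only the batch size of 100 comes from the article.

```python
from collections import deque
from typing import Callable, Iterator

BATCH_SIZE = 100  # products per batch, as used in the article


def batches(product_ids: list[str], size: int = BATCH_SIZE) -> Iterator[list[str]]:
    """Yield consecutive chunks of at most `size` product IDs."""
    for start in range(0, len(product_ids), size):
        yield product_ids[start:start + size]


def enqueue_catalogue_update(queue: deque, product_ids: list[str]) -> int:
    """Put one message per batch on the queue and return the number of batches."""
    count = 0
    for batch in batches(product_ids):
        queue.append({"batch_no": count, "product_ids": batch})
        count += 1
    return count


def drain(queue: deque, process: Callable[[list[str]], None]) -> list[str]:
    """Worker loop: process each batch independently and record live progress."""
    total, done, progress = len(queue), 0, []
    while queue:
        message = queue.popleft()
        process(message["product_ids"])  # e.g. transform and load this batch only
        done += 1
        progress.append(f"{done}/{total} batches loaded")
    return progress
```

A catalogue-wide change to 250 products becomes three messages of 100, 100 and 50 products. Each message can be picked up by any worker, and the progress strings are the kind of status a user-facing view could display.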

We also used Azure Blob Storage for product media files like images and user manuals - this took up less memory and improved the processing speed, as the images were referenced by URL rather than passed through as binary data.

2. Concurrency control

We discovered that multiple processes could attempt to update the same products simultaneously, for example when our client and one of their product vendors both change a price at the same time.

We solved this by implementing a first-in, first-out (FIFO) system that applies both updates in the order they were made, even if the second price change arrives while the first is still being processed. Users can follow the progress of the updates transparently and are notified once everything has been loaded successfully into Shopify.
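One way to picture this is a queue per product, where updates wait their turn rather than overwriting each other. The Python sketch below assumes updates are serialised per SKU. The class, its methods and the `(sku, source, price)` log format are hypothetical, and it is not MeldEagle's C# implementation.

```python
from collections import defaultdict, deque


class ProductUpdateQueue:
    """Serialises updates per product in first-in, first-out order."""

    def __init__(self) -> None:
        self._pending: dict[str, deque] = defaultdict(deque)
        self.applied: list[tuple[str, str, float]] = []  # (sku, source, price) log

    def submit(self, sku: str, source: str, price: float) -> None:
        # Updates are never rejected; they queue behind earlier ones for the same SKU
        self._pending[sku].append((source, price))

    def process_next(self, sku: str) -> bool:
        """Apply the oldest pending update for `sku`; return False if none are waiting."""
        queue = self._pending.get(sku)
        if not queue:
            return False
        source, price = queue.popleft()
        self.applied.append((sku, source, price))  # stand-in for the Shopify write
        return True
```

If a client submits a price of 19.99 and a supplier submits 17.50 before the first update has been processed, both are applied in arrival order, so the final price is the supplier's later change and neither update is lost.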

3. Shopify API rate limits

Shopify's API has fairly strict rate limits that are easy to hit with large catalogues, and these limits also vary depending on the client's Shopify plan.

We solved this by implementing adaptive throttling that adjusts to Shopify's GraphQL API responses. Shopify's rate limits are based on a 'query cost' and use a 'leaky bucket' replenishment algorithm. Our system monitors the rate limit information returned by Shopify with each API response, which shows how many points the last query cost and how many points we have remaining. We keep each individual request small relative to the overall size of the bucket, and as we start to run out of points, we automatically slow down requests. This allows the service to reach an equilibrium that loads data into Shopify as fast as their API allows.
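The core calculation can be sketched in a few lines of Python (MeldEagle itself runs on C#). It reads `extensions.cost.throttleStatus` (`maximumAvailable`, `currentlyAvailable`, `restoreRate`), which Shopify's GraphQL Admin API returns with each response. The function name, the `next_cost` estimate and the 10% reserve are hypothetical choices for illustration.

```python
def seconds_to_wait(response: dict, next_cost: float, reserve_ratio: float = 0.1) -> float:
    """Delay before the next Shopify GraphQL call, based on the last response.

    The bucket refills at `restoreRate` points per second, so the wait is the
    time needed to refill enough points for `next_cost` plus a safety reserve.
    Once available points fall below that level, the wait grows as they run out.
    """
    status = response["extensions"]["cost"]["throttleStatus"]
    available = status["currentlyAvailable"]
    restore_rate = status["restoreRate"]
    # Hypothetical safety margin: never plan to drain the bucket below 10%
    reserve = status["maximumAvailable"] * reserve_ratio
    shortfall = next_cost + reserve - available
    return max(0.0, shortfall / restore_rate)
```

A sync loop would sleep for the returned number of seconds before each request. A reasonable estimate for `next_cost` is the previous response's `actualQueryCost` for the same query shape. Keeping queries cheap relative to `maximumAvailable` means the delays stay short and frequent rather than long and bursty.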

Security

In the modern digital age, security is absolutely essential - especially for a SaaS product like MeldEagle that handles sensitive e-commerce data. At Red Eagle Tech, we're committed to protecting our users and their information with robust, practical measures. Here's how we've built security into our platform:

- Strong authentication: We enforce complex passwords and require multifactor authentication (MFA) for all accounts. This adds a critical layer of defence against unauthorised access, keeping user data safe.

- Server-side operations: All sensitive tasks - like handling API keys or processing critical data - are kept strictly server-side. By avoiding client-side exposure, we reduce the risk of interception or misuse, a simple yet vital step we've all seen startups overlook to their peril.

- Established frameworks: We leverage trusted, widely used tools like Microsoft Identity Framework and Duende IdentityServer for authentication and authorisation. These battle-tested solutions save us from reinventing the wheel, delivering proven security we can build upon.

- Regular updates: We stay proactive with security by scheduling regular patches and updates for our systems and dependencies. It's built into our sprint plans - an overhead worth every minute to stay ahead of vulnerabilities.

Steps like these help to safeguard our users' data and to position us as a startup that takes security seriously. For us, it's not just a feature - it's part of our value proposition. In a world where security is sometimes an afterthought, we believe good security can be a point of differentiation.

Front-end performance optimisation

Sound backend architecture is of course super important for processing data efficiently, but we also needed to ensure a great user experience by optimising the frontend performance of our web app. So, we implemented the following:

Critical CSS loading

We used Critical CSS to improve initial page load times, which meant:

- Identifying the CSS needed for above-the-fold content (what users see immediately)

- Inlining this critical CSS directly in the HTML head

- Loading the remaining CSS asynchronously
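The resulting page head can be sketched as follows. Python is used here for brevity, whereas an ASP.NET Core app would normally emit this markup from a Razor layout. The `rel="preload"` plus `onload` pattern is a common way to load a stylesheet without blocking rendering. The function name is hypothetical, and extracting the critical CSS itself (usually done by a build tool) is not shown.

```python
from html import escape


def render_head(critical_css: str, full_css_url: str) -> str:
    """Build a <head> that inlines critical CSS and loads the rest asynchronously."""
    url = escape(full_css_url, quote=True)
    return (
        "<head>\n"
        # 1. Above-the-fold rules inlined, so first paint needs no extra request
        f"<style>{critical_css}</style>\n"
        # 2. Full stylesheet fetched without blocking rendering, then applied on load
        f'<link rel="preload" href="{url}" as="style" '
        "onload=\"this.onload=null;this.rel='stylesheet'\">\n"
        # 3. Fallback for visitors with JavaScript disabled
        f'<noscript><link rel="stylesheet" href="{url}"></noscript>\n'
        "</head>"
    )
```

This assumes `critical_css` comes from your own build output and is therefore trusted. It is inlined verbatim, so it must not contain a closing `</style>` tag.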

Hosting our own client-side libraries

We host all our client-side libraries - like Bootstrap, fonts, and media player components - on our own cloud infrastructure, so we're always confident in the speed and reliability of our web app. Serving these assets ourselves removes our dependency on third-party CDNs, which can introduce delays or inconsistencies, and gives our users fast, predictable load times wherever they are. It also gives us full control over versioning and updates, so nothing breaks unexpectedly. It's a simple strategy that keeps performance in our hands and enhances the overall user experience.

Using Azure Front Door

We've implemented Azure Front Door as the gateway for all traffic to our infrastructure, leveraging its powerful features to boost both performance and security. Static assets - like images, CSS, and JavaScript - are cached at the edge, cutting down load times for users. We pair this with ASP.NET Core's asset versioning to ensure stale assets are never served. Security gets a big lift too, thanks to Azure Front Door's built-in DDoS protection and web application firewall (WAF). And here's the key: all traffic must go through Azure Front Door - there's no alternative route to our infrastructure. After all, having a fortified front door is pointless if attackers could simply slip in via a side entrance.
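Asset versioning works because the URL changes whenever the file's content changes. The edge can then cache aggressively, and a stale copy is simply never requested again. ASP.NET Core exposes this through the `asp-append-version` tag helper attribute. The Python sketch below shows the same idea but is not ASP.NET's implementation, and the function name is hypothetical.

```python
import base64
import hashlib


def versioned_url(path: str, content: bytes) -> str:
    """Append a hash of the file's content so any change produces a new URL.

    Edge caches can then keep assets for a long time: a changed file is
    requested under a new URL, so the stale copy is simply never asked for.
    """
    digest = hashlib.sha256(content).digest()
    token = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return f"{path}?v={token}"
```

The same content always yields the same URL, so unchanged assets keep their cache hits across deployments.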

Data caching

Our solution is pretty data-heavy, so the web app often needs to retrieve data from the back-end database. To speed this up, we pre-cache data on the server side, so most of our pages are served to the user almost instantly, without waiting on the database.
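Server-side pre-caching can be sketched as a cache that is warmed before requests arrive and refreshed when entries expire. In ASP.NET Core, `IMemoryCache` or a distributed cache would typically play this role. The Python version below assumes a simple time-to-live policy, and the class name, loader function and TTL are hypothetical.

```python
import time
from collections.abc import Hashable
from typing import Callable


class PreloadedCache:
    """Server-side cache that is warmed up front and refreshed when entries expire."""

    def __init__(self, loader: Callable[[Hashable], object], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._loader = loader  # hypothetical function that queries the database
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict = {}  # key -> (value, expires_at)

    def warm(self, keys) -> None:
        """Pre-cache: load data that pages will need before any request asks for it."""
        for key in keys:
            self._store(key)

    def get(self, key: Hashable) -> object:
        entry = self._entries.get(key)
        if entry is None or entry[1] <= self._clock():
            return self._store(key)  # miss or expired: fall back to the database
        return entry[0]  # hit: served without a database round trip

    def _store(self, key: Hashable) -> object:
        value = self._loader(key)
        self._entries[key] = (value, self._clock() + self._ttl)
        return value
```

Calling `warm` at start-up (or after a data change) means the first page request is already a cache hit. The injectable `clock` makes expiry easy to test.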

Together, these optimisations helped us to improve our PageSpeed scores from an average of around 60 to 90+, making for a great user experience and happy clients.

Responsive design with Bootstrap

We used the Bootstrap CSS framework, customised with our own SCSS to move away from the classic 'Bootstrap' look and to match our brand colours and design ethos. This gave us a consistent, responsive UI without reinventing the wheel. Although it's been around for a while, we still rate Bootstrap highly for accelerating development and producing high-quality responsive user interfaces without over-complicating things. Our UX designer worked with the developers to create a system that kept components consistent while saving development time.

What did we learn from this?

Coding's a wild ride, and building MeldEagle taught us a ton - some lessons we saw coming, others hit us like a rogue bug in production. If we were starting fresh, here's what we'd do differently from the start:

- Open the curtains for users: We started out with logs and monitoring tools that only our engineering team could see - a classic approach. Then we flipped the script and let users peek under the hood, but in a measured, thoughtful way that's genuinely useful to them. Real-time insights into what's humming along (or not) built trust like nothing else. Whether it's a sync in progress or a data tweak, showing users the action cuts confusion and support tickets. Start with user-facing transparency - your engineers will thank you, and your users will stick around.

- Always drop release notes: We thought release notes weren't that interesting to users - we were wrong. Sharing them with every deployment - without fail - turned out to be a trust goldmine. Sure, not everyone reads them, but when a fix or feature matters to them, users hunt for it. Make those notes detailed and searchable, and you'll save everyone time while shouting, "Hey, we're on it!" It's a small move with a big impact.

- Infrastructure as code from day one: We've used GitHub Actions for automated CI/CD code deployment from day one. But early on, we had a gap around cloud infrastructure deployment: we burned hours manually creating and tweaking environments, which was painful. Honestly, don't do it. Then we adopted Bicep for Azure, allowing us to deploy infrastructure as code, and it's brilliant. Consistent setups across dev, staging, and prod? Check. Automated infra deployments that work while you're making a coffee? Double check. Skip the manual mess - tooling like this is too good to sleep on.

- Cache like you mean it: We were shy about caching at first, worried about server strain. Big mistake. Once we went aggressive - think configuration data, product data, and even live, real-time status - page loads became instant. Users noticed, and we think it set us apart from the sluggish cloud tools they're used to wrestling with. A little extra resource cost for a snappy experience? Worth it if you ask us. Speed's the name of the game.

These aren't just tech hacks - they're how we learned to build something users don't just tolerate but actually enjoy. And yes, we're still figuring it out - there will be plenty more lessons to come.

Conclusion

After a lot of planning, numerous iterations and all the valuable lessons we learnt along the way, we're proud of MeldEagle and how it's helping to make Shopify store owners' product management workflow easy, and - dare we say it - enjoyable.